It's been a great experience working with the Data, Analytics and Learning MOOC (DALMOOC) from EdX at https://www.edx.org/course/data-analytics-learning-utarlingtonx-link5-10x#.VJO6xF4AA. I'm so glad my boss found it and encouraged me to take it (although I started only in Week 5 of the 9-week course). It was not easy, but it was a very rewarding experience. I would think an estimated 5 hours/week would not be sufficient to gain all the competencies, especially as the topics become tougher in the later weeks.

I liked the overall design of the course, where the contents progress smoothly, starting from a general introduction to learning analytics and getting started with data analysis, then moving to deeper topics like social network analysis, prediction modeling and text mining. Sadly, since I started late, I jumped back and forth instead of following the flow. But that's the best part: the course is designed to be flexible, so I could directly access the topics that interested me the most without needing to follow the order. I also liked that there were ways to create artifacts for future use and easy sharing, like my blog, which I created exclusively for this course!

The contents were good and aptly selected. There is deeper material in each of these topics, but this course provides the basic foundation on which we can explore further. I found Weeks 5 & 6 to be content-rich, which was necessary to understand many concepts in Weeks 7 & 8. The activities with specific points allotted in Weeks 5 & 6 were good; I wish there were more in other topics to test our understanding (but better organized and with some tips). The voices from the field about the different research taking place in this area are very useful bonus content.

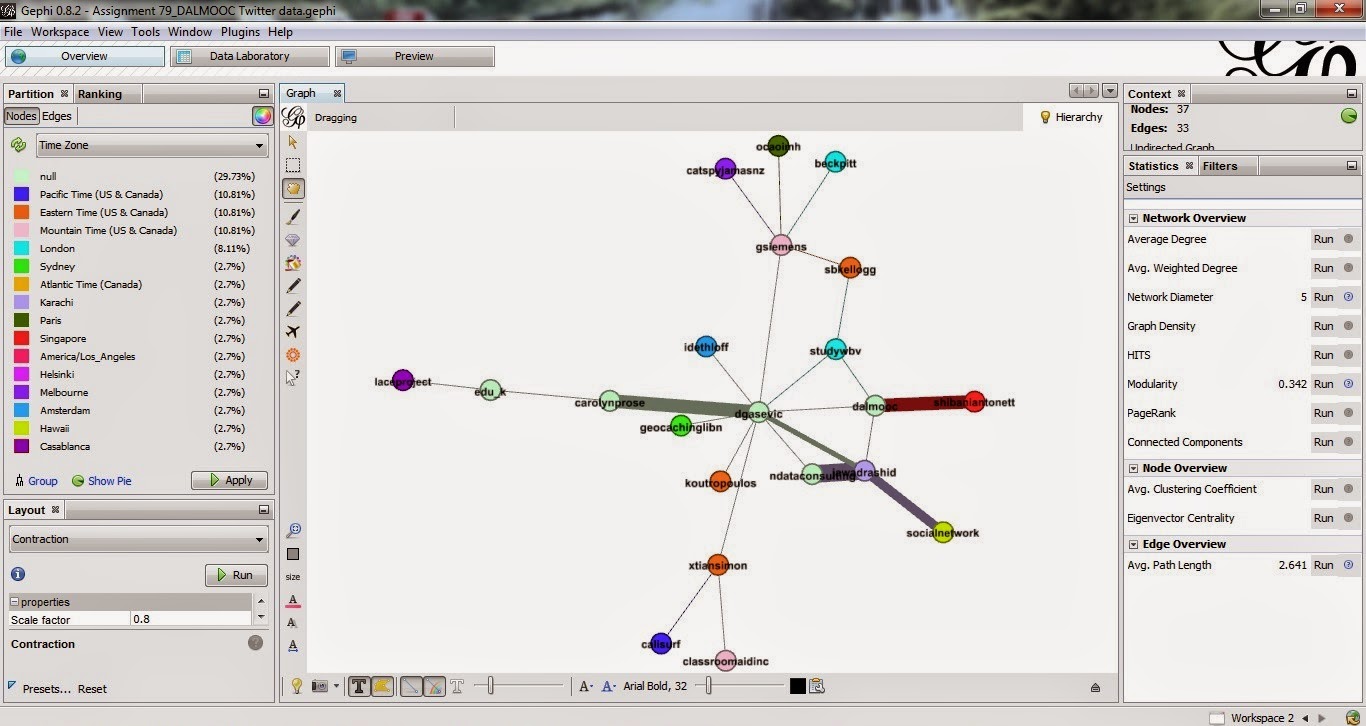

The ways to interact were well planned and executed. The discussion forum was very useful, and the students were eager to jump in to answer questions and help others. Connecting through Twitter helped us reach instructors and other course mates with ease. I didn't have any luck getting into the collaborative chat, though. Along with the different options for connecting with social networks like Facebook and Twitter (especially in Prosolo), I would personally like to see LinkedIn and Google Groups added as well.

The instructors asked us to reflect rather than see the different activities only as homework, and not to challenge ourselves to do everything. Unfortunately, I was doing both at times :( This is what happens when we skip the initial weeks where important instructions are given. Anyway, considering that this is the first MOOC I've ever taken, I give myself credit for doing reasonably well. Yay :)

In terms of improvements that I would want to see in future courses, most of them are related to accessibility. Most of the available options, though explained upfront during orientation, could be better organized. For example, the different contents in EdX that were in tabbed format were not very noticeable. I used to go to Prosolo every time to see all the contents, since I thought EdX didn't have them all (how stupid of me, lol). Another big difficulty when I first started using EdX was identifying which video I should watch first in a chapter. I would often watch one, go to the next as given in alphabetical order, and then realize that I should have watched the second one first. It would be good if the course contents were numbered and ordered that way!

The option to download handouts was not given in all weeks, so providing it throughout would help. Making the bazaar assignments more accessible would also help students. Rather than linking to an external assignment bank (which didn't have all the weeks' assignments), it would be better if it were integrated within EdX or Prosolo. I discovered late that Quickhelper has two different options, Question and Discussion (a tip on hover could help, maybe). And I didn't know whether I should post under each week's course or under the discussion forum link (I later came to know they both link to the same place). Perhaps consider one integrated place for all discussion? Of course, as our instructors mentioned when talking about future plans, it would be good to see real-time analysis showing who stands where in the network and whom we should connect with for better access.

Those were some suggestions I could think of to overcome the difficulties I faced while taking this course. I think these will definitely be looked into in future courses. For a course of this kind built for the first time, it is so well done. I've learned so much in so little time (miles to go, yet). Big appreciation to all the instructors who put in so much time and effort to bring it together. Thank you so much!

After toiling for days to learn, do assignments and acquire competencies, it's now time to relax. And don't forget to connect with peers. Happy holidays everyone!
